COCO spliced datasets

We used the COCO dataset to generate a manipulated (spliced) dataset. Because COCO ships with segmentation masks as labels, we first extracted the desired object regions from the original images by applying the masks, and then spliced those regions into other images. Each image was altered with approximately 8 to 10 objects, for a total of around 900k manipulated images.
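The mask-then-paste step described above can be sketched as follows. This is a minimal illustration, not the pipeline actually used to build the dataset: the function name `splice_object`, the `top_left` parameter, and the choice to return a boolean ground-truth mask are all assumptions, and images are assumed to be NumPy arrays already loaded in memory.

```python
import numpy as np

def splice_object(source, mask, target, top_left=(0, 0)):
    """Paste the masked region of `source` into a copy of `target`.

    source:   H x W x 3 donor image
    mask:     H x W boolean object mask for the donor image
    target:   image that receives the object
    top_left: (row, col) in `target` where the object's bounding box is placed
              (hypothetical parameter, chosen for this sketch)
    Returns the manipulated image and its boolean manipulation mask.
    """
    mask = np.asarray(mask, dtype=bool)
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("mask is empty")

    # Crop the donor image and mask to the object's bounding box.
    r0, r1 = rows.min(), rows.max() + 1
    c0, c1 = cols.min(), cols.max() + 1
    patch = source[r0:r1, c0:c1]
    patch_mask = mask[r0:r1, c0:c1]

    tr, tc = top_left
    if tr < 0 or tc < 0:
        raise ValueError("top_left must be non-negative")

    # Clip the patch so it stays inside the target image.
    h = min(patch.shape[0], target.shape[0] - tr)
    w = min(patch.shape[1], target.shape[1] - tc)
    if h <= 0 or w <= 0:
        raise ValueError("paste location is outside the target image")
    patch, patch_mask = patch[:h, :w], patch_mask[:h, :w]

    out = target.copy()
    gt = np.zeros(target.shape[:2], dtype=bool)

    # Copy only the object pixels; background pixels of the patch are ignored.
    region = out[tr:tr + h, tc:tc + w]  # view into `out`
    region[patch_mask] = patch[patch_mask]
    gt[tr:tr + h, tc:tc + w] = patch_mask
    return out, gt
```

Calling the function repeatedly on the same target with different donor objects would give the multi-object manipulation described above. Blending or resizing of the pasted object is left out of this sketch.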

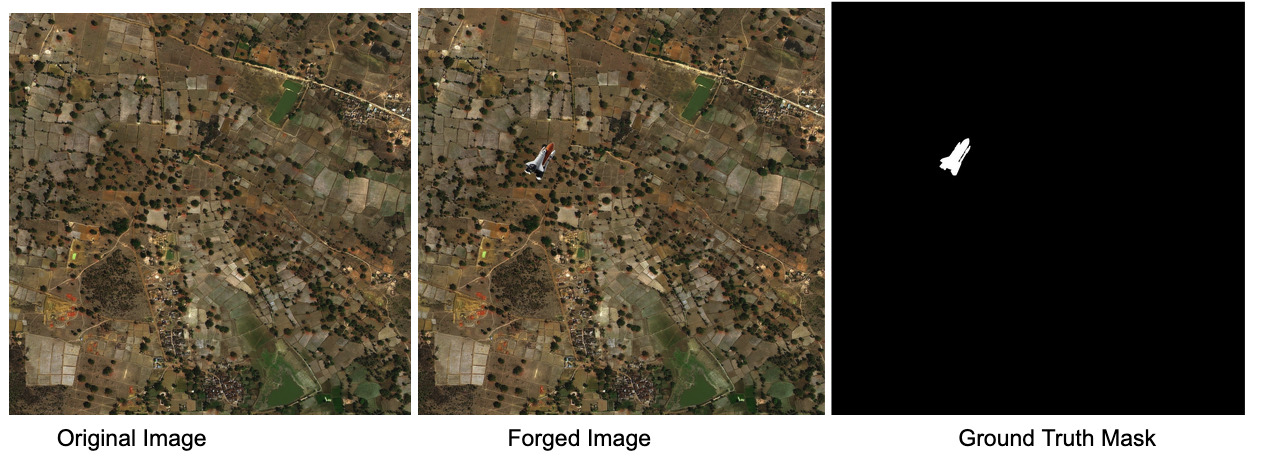

Satellite Forgery Image Dataset

We used the DeepGlobe dataset to create satellite forgery images, following the method proposed in Deep Belief networks. A total of 293 orthorectified images with a resolution of 1000×1000 pixels were collected. We used 100 of the 293 orthorectified images to create manipulated images. Nineteen different objects were spliced into the 100 images, generating a total of 500 manipulated images with their corresponding manipulation ground-truth masks. The 19 objects include rockets, planes, and drones. The figure shown below illustrates some examples from the manipulated dataset.

RWDF-23 Dataset

RWDF-23 is collected from the wild and consists of 2,000 deepfake videos gathered from 4 platforms (Reddit, YouTube, TikTok, and Bilibili), targeting 4 different languages and created in 21 countries. By expanding the dataset's scope beyond previous research, we capture a broader range of real-world deepfake content, reflecting the ever-evolving landscape of online platforms. We also conduct a comprehensive analysis of various aspects of deepfakes, including creators, manipulation strategies, purposes, and real-world content production methods. This gives us insight into the nuances and characteristics of deepfakes in different contexts. Lastly, in addition to the video content, we collect viewer comments and interactions, which lets us explore how internet users engage with deepfake content. To obtain the dataset, please fill out the form HERE.

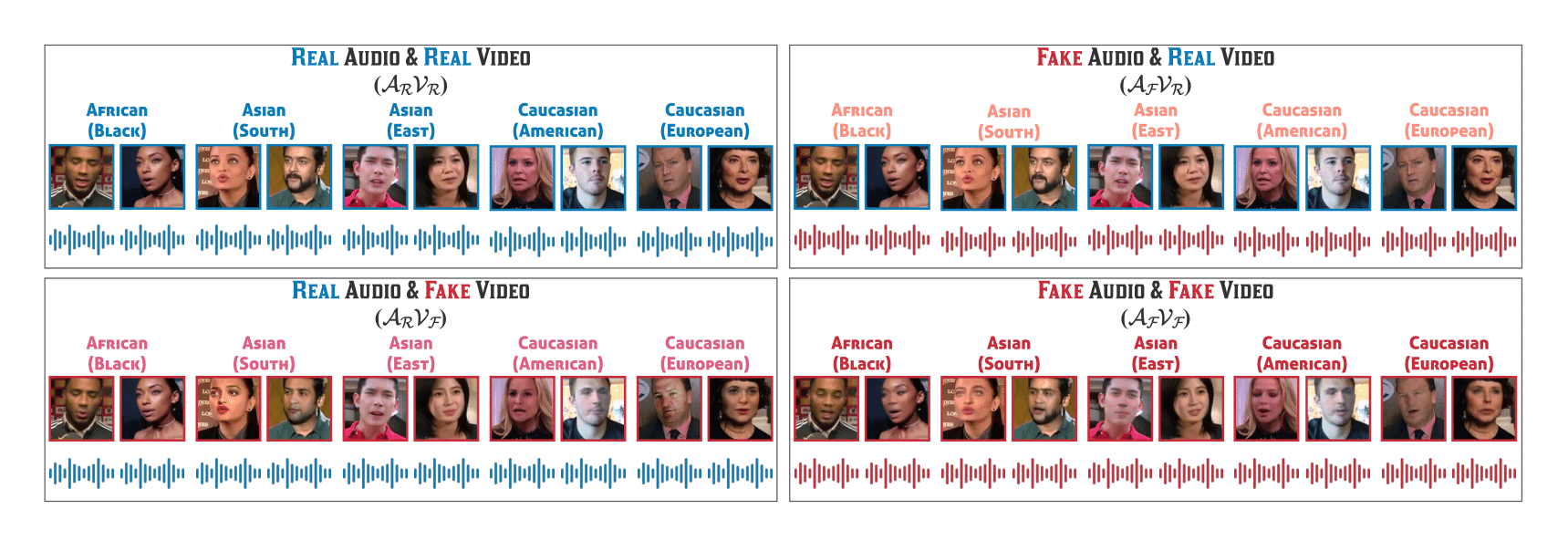

FakeAVCeleb Dataset

FakeAVCeleb is a novel audio-video deepfake dataset that contains not only deepfake videos but also the corresponding synthesized, lip-synced fake audio. It is generated using the most popular recent deepfake generation methods. To make the dataset more realistic and to counter racial bias, we selected real YouTube videos of celebrities from four racial backgrounds (Caucasian, Black, East Asian, and South Asian).

VFP290K dataset

The Vision-based Fallen Person (VFP290K) dataset consists of 294,714 frames of fallen persons extracted from 178 videos across 49 backgrounds, composing 131 scenes. We empirically demonstrate the effectiveness of the features through extensive experiments that compare the performance shift across object detection models. In addition, we evaluate VFP290K on properly divided dataset splits by measuring the performance of fallen-person detection systems. Using VFP290K, we ranked first in the first round of the anomalous behavior recognition track of AI Grand Challenge 2020, South Korea. The dataset can also extend to other applications, such as intelligent CCTV or monitoring systems.

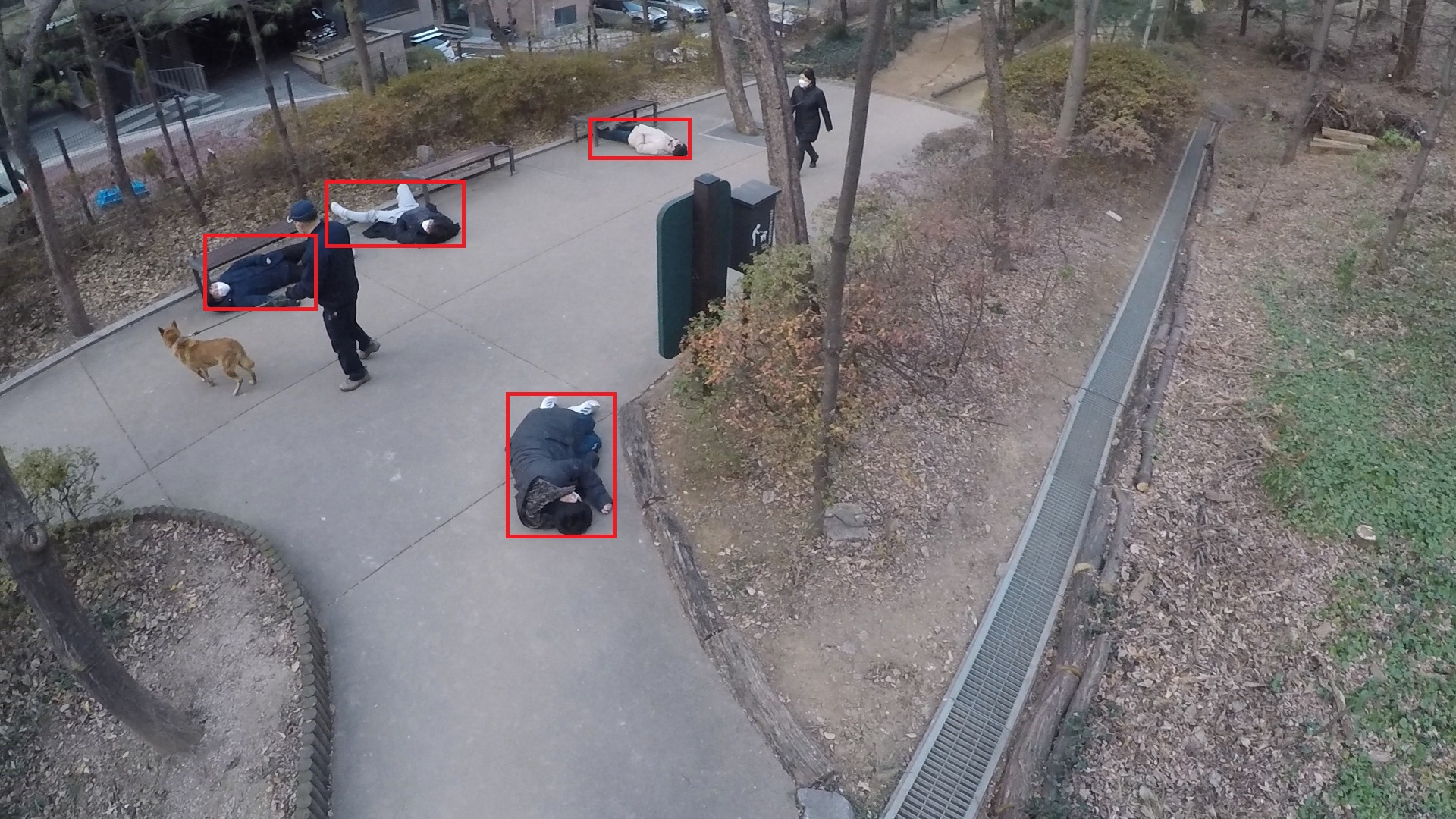

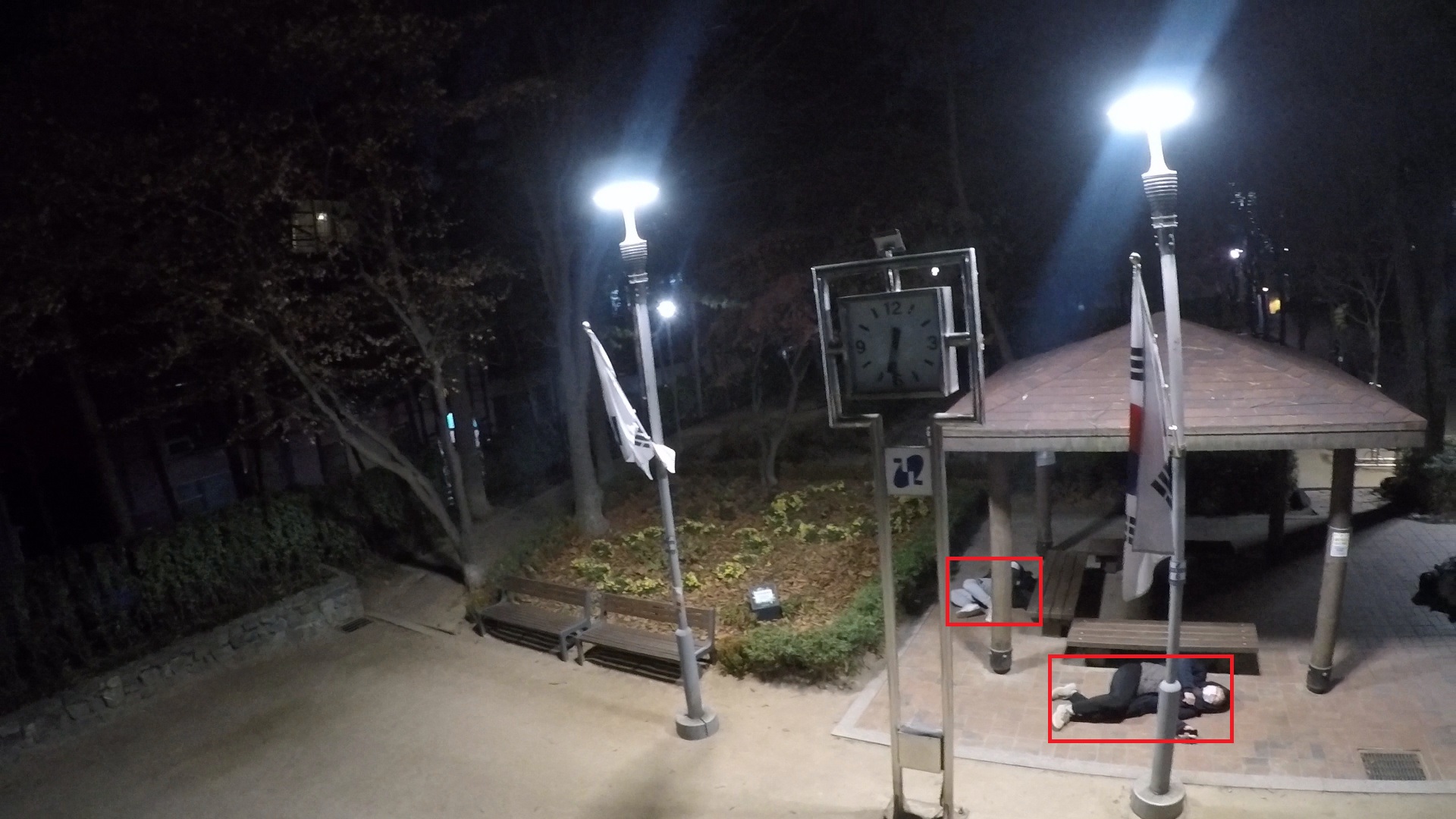

SKKU AGC Anomaly Detection Dataset

The SKKU AGC Anomaly Detection Dataset was acquired with a stationary camera mounted at an elevation, overlooking pedestrians, during both day and night at various locations. An abnormal event is defined as a person's head touching the ground. The data is split into detection data and classification data.

1. Detection Data

It consists of images and anomaly labels. Images (1920x1080) are in the day and night folders. Labels (.xml files) are in the day_anno and night_anno folders.

day: 3000

night: 2000

2. Classification Data

Images are cropped to the human regions only. There are two classes, normal and falldown. Images are in the normal_day, normal_night, falldown_day, and falldown_night folders.

normal_day: 3200

normal_night: 1300

falldown_day: 3700

falldown_night: 900
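As a quick sanity check on class balance, the folder counts listed above can be tallied per class and per time of day. This is only an illustrative sketch: the dictionary simply copies the numbers from the listing, and the helper name `tally` is made up for this example.

```python
from collections import Counter

# Image counts per folder, copied from the listing above.
CLASSIFICATION_COUNTS = {
    "normal_day": 3200,
    "normal_night": 1300,
    "falldown_day": 3700,
    "falldown_night": 900,
}

def tally(counts):
    """Aggregate folder counts by class label and by time of day."""
    per_class, per_time = Counter(), Counter()
    for folder, n in counts.items():
        # Folder names follow the pattern "<class>_<day|night>".
        label, time_of_day = folder.rsplit("_", 1)
        per_class[label] += n
        per_time[time_of_day] += n
    return per_class, per_time

per_class, per_time = tally(CLASSIFICATION_COUNTS)
print(dict(per_class))                      # {'normal': 4500, 'falldown': 4600}
print(dict(per_time))                       # {'day': 6900, 'night': 2200}
print(sum(CLASSIFICATION_COUNTS.values()))  # 9100
```

The two classes are nearly balanced overall, while night images make up roughly a quarter of the classification data.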

Examples of Detection data

Examples of Classification data

Deepfake Inspector

Try our beta version here: Tool.